How to Think About Time in Programming

Date published: Jun 23, 2025

Time handling is everywhere in software, but many programmers talk about the topic with dread and fear. Some warn about how difficult the topic is to understand, listing bizarre timezone edge cases as evidence of complexity. Others repeat advice like "just use UTC bro" as if it were an unconditional rule - if your program needs precise timekeeping or has user-facing datetime interactions, this advice will almost certainly cause bugs or confusing behavior. Here's a conceptual model for thinking about time in programming that encapsulates the complexity that many programmers cite online.

Absolute Time

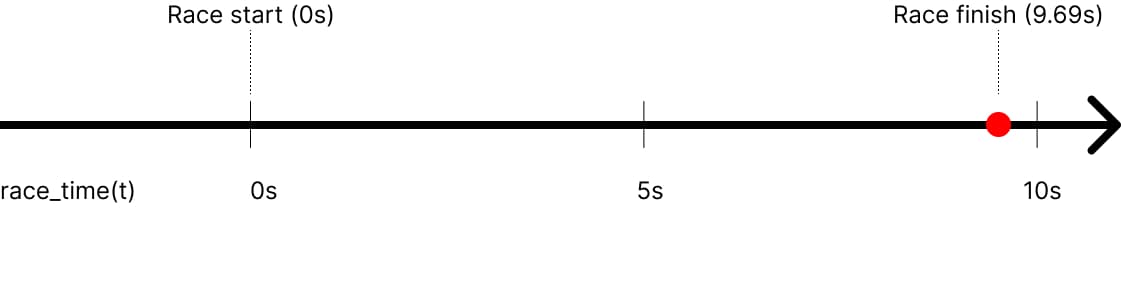

Two important concepts for describing time are "durations" and "instants". A duration is like the number of seconds it takes Usain Bolt to finish a 100m race; an instant is like the moment that he crossed the finish line, or the moment he was half-way through the race. The concept of "absolute time" (or "physical/universal time") refers to these instants, which are unique and precisely represent moments in time, irrespective of concepts like calendars and timezones.

Usain Bolt at the Beijing 2008 Olympics (Photo from boston.com)

How can we refer to two instants in a way that describes which instant came first and what the duration is between them? Labeling them like "The instant Usain finished the race" or "The instant Neil Armstrong first stepped on the Moon" doesn't provide this information (it's like how labeling places as "home" or "store" doesn't communicate the distance between them). One way to precisely refer to instants is by their duration from some reference point: the instant Usain crossed the finish line is the instant 9.69 seconds from the reference point when the race starting pistol went off.

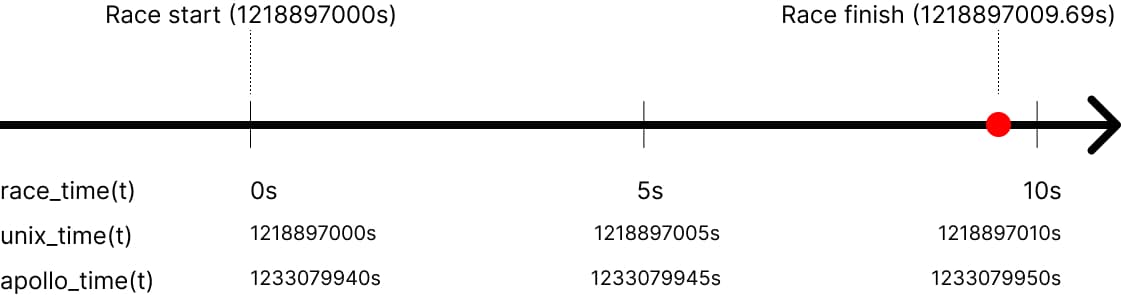

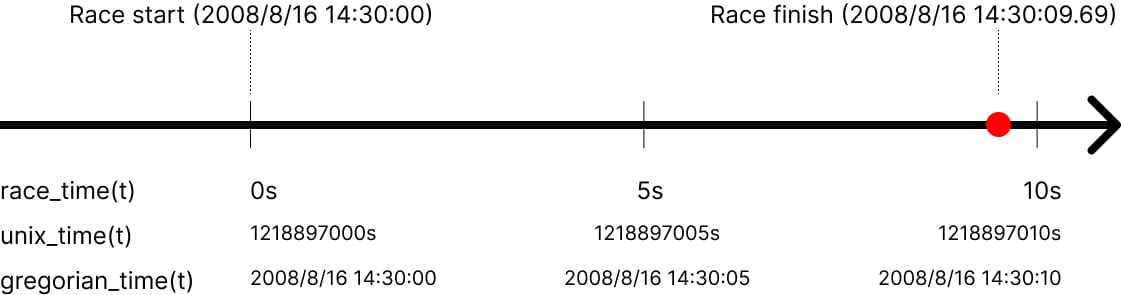

Programmers call this reference instant an "epoch" - it's the instant whose number representation is 0 seconds. The epoch could be any instant: Many systems support Unix time, which uses the Unix epoch (Jan 1st, 1970 00:00:00 UTC), but other epochs work too (e.g. Apollo_Time in Jai uses the Apollo 11 rocket landing at July 20, 1969 20:17:40 UTC).
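As a rough sketch of this idea (in Python; the constant and function names are illustrative, not from any library), the same instant can be written as a number of seconds from either epoch, and the two numbers differ only by the fixed distance between the epochs:

```python
from datetime import datetime, timezone

# Two reference instants ("epochs"), both labeled here in UTC.
UNIX_EPOCH = datetime(1970, 1, 1, 0, 0, 0, tzinfo=timezone.utc)
APOLLO_EPOCH = datetime(1969, 7, 20, 20, 17, 40, tzinfo=timezone.utc)

def seconds_since(epoch: datetime, instant: datetime) -> float:
    """Represent an instant as a duration (in seconds) from an epoch."""
    return (instant - epoch).total_seconds()

# An arbitrary example instant. Python's datetime ignores leap seconds,
# so these are Unix-style second counts.
instant = datetime(2008, 8, 16, 14, 30, 0, tzinfo=timezone.utc)
unix_seconds = seconds_since(UNIX_EPOCH, instant)
apollo_seconds = seconds_since(APOLLO_EPOCH, instant)

# The two representations differ by a constant: the gap between the epochs.
epoch_shift = seconds_since(APOLLO_EPOCH, UNIX_EPOCH)
print(unix_seconds, apollo_seconds, epoch_shift)
```

Changing the epoch only shifts every number by the same amount; the ordering of instants and the durations between them stay the same.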

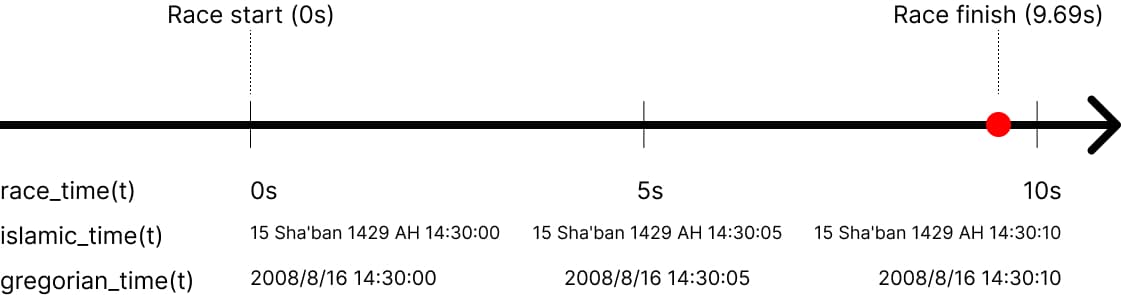

Each row of labels represents the same absolute instants in time, but with different rules for what those labels look like

The importance of an epoch may feel untrue, since we talk about instants in everyday conversation without explicitly stating a reference point, but even in phrases like "last night" or "tomorrow at noon", you're implicitly assuming a reference point of the current moment and talking about an instant that's some number of seconds away from the current moment. It's like how you can say "here" or "there" because of the implicit reference point of your current position.

Civil Time

While the seconds-from-epoch model is a useful way to talk about durations and instants, humans don't generally say "Wanna grab lunch at 1,748,718,000 seconds from the Unix epoch?" To discuss time, we invented "civil time" - higher level language and standards for labeling absolute time.

The Gregorian calendar is the most common system for expressing civil time - if you can read July 20, 1969 20:17:40, then you're familiar with it. An instant is labeled as a "datetime", with year/month/day/hour/minute/second values that increment according to defined rules (e.g. "increment the day field after 24 hours"). Some regions use different "locales" to display datetimes (e.g. en-US 6/5/2025 6:00 PM vs de-DE 5.6.2025, 18:00), but the underlying information is the same.

The Gregorian calendar also defines labels for "periods" like Monday to Sunday and January to December. Think of periods as ambiguous durations: for example, a month can be anywhere from 28-31 days. The idea of "adding 1 month" to a datetime is ambiguous (in terms of the # of seconds added), but it's stable enough for humans to use periods to discuss larger durations of time.
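To make the ambiguity concrete, here is a small Python sketch (the helper name is made up for illustration) that measures "1 month" in seconds for a few different months, assuming every day is exactly 86,400 seconds:

```python
import calendar

SECONDS_PER_DAY = 86_400  # assumption: no leap seconds or UTC offset changes

def seconds_in_month(year: int, month: int) -> int:
    """Length of one calendar month as a duration, in seconds."""
    _, days = calendar.monthrange(year, month)
    return days * SECONDS_PER_DAY

feb_2025 = seconds_in_month(2025, 2)  # 28 days
feb_2024 = seconds_in_month(2024, 2)  # 29 days (leap year)
jul_2025 = seconds_in_month(2025, 7)  # 31 days
print(feb_2025, feb_2024, jul_2025)
```

The same period name covers three different durations here, which is why a period only becomes a precise duration once you know which datetime it is being added to.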

This post focuses on the Gregorian calendar (instead of other systems like the Islamic calendar) since it's the standard calendar for most people, but all of these calendar systems do the same general thing: Each specifies well-defined rules for labeling absolute instants in time.

Modern timekeeping

Unix time and the Gregorian calendar are similar in that they are both "seconds-from-epoch" representations that can be converted between each other. Unix time counts seconds from January 1, 1970, while the Gregorian calendar counts from January 1, AD 1. We can express any instant in either system because we've "declared" reference points that link the systems together - the Unix epoch is defined in terms of the Gregorian calendar, creating an interoperable system. By representing instants as durations from an epoch, computers can more easily perform arithmetic and comparisons (e.g. checking if an instant comes before another instant is a numerical < operation).
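A minimal Python sketch of that interoperability, using only the standard library (variable names are illustrative): a Unix timestamp can be turned into a Gregorian label and back, and ordering two instants is just an integer comparison.

```python
from datetime import datetime, timezone

# Unix time -> Gregorian label (expressed in UTC).
lunch_unix = 1_748_718_000
lunch = datetime.fromtimestamp(lunch_unix, tz=timezone.utc)
print(lunch.isoformat())  # 2025-05-31T19:00:00+00:00

# Gregorian label -> Unix time (negative, because it precedes the epoch).
moon_landing = datetime(1969, 7, 20, 20, 17, 40, tzinfo=timezone.utc)
moon_landing_unix = int(moon_landing.timestamp())

# "Which instant came first?" is a numerical < operation.
came_first = moon_landing_unix < lunch_unix
```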

Theoretically, all computers could use the same epoch for timekeeping, but different epochs make more sense depending on requirements. For example, if your program only handles datetimes from 1970 - 2038, Unix time can represent them using 32-bit integers (so you potentially reduce memory and CPU usage by keeping the representation to 32 bits).
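As a quick check of where the 2038 limit comes from, this Python sketch computes the range of instants that a signed 32-bit count of seconds from the Unix epoch can label (a signed integer also reaches back before 1970, to 1901):

```python
from datetime import datetime, timedelta, timezone

UNIX_EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)
INT32_MAX = 2**31 - 1
INT32_MIN = -(2**31)

# The latest and earliest instants representable as signed 32-bit Unix time.
latest = UNIX_EPOCH + timedelta(seconds=INT32_MAX)
earliest = UNIX_EPOCH + timedelta(seconds=INT32_MIN)
print(earliest.isoformat(), latest.isoformat())
```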

But how do we know the current absolute time? If today is 6/8/2025 00:00:00 how do we track that 86,400 seconds have passed since 6/7/2025 00:00:00, and so on extending back to Jan 1, AD 1? The history of human timekeeping is long, but here's a short version: For a long time, humans based timekeeping on the Sun; they operated under the assumption that a day has 24 hours based on Earth's rotation around its axis, and that a year has 365 days based on Earth's orbit around the Sun. A second was defined as 1/86400 of the average "solar day" (the duration it takes Earth to rotate so that the Sun returns to the same position in the sky. This requires ~361° of rotation since Earth also orbits the Sun and needs to rotate an extra ~1° to compensate).

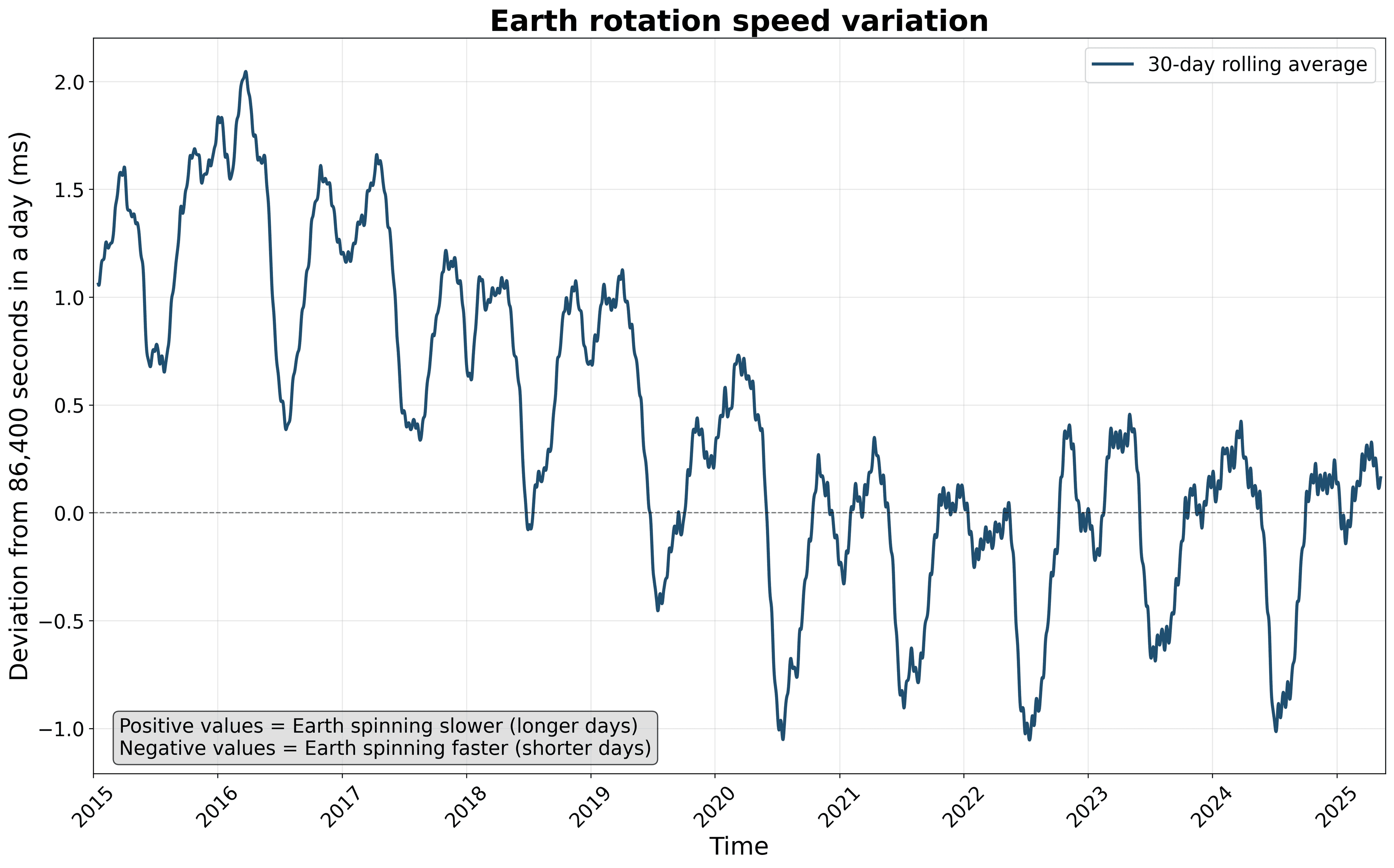

However, this model is technically wrong. Earth's rotation speed fluctuates over time, partly due to climatic and geographical forces; the overall trend is that a day gets ~1.8ms longer every 100 years.

The length of a day was ~0.73ms longer than 86,400 seconds on May 24, 2025 (Graph is my own, generated with IERS data)

Moreover, Earth takes ~365.25 days to orbit the Sun, not 365. The Gregorian calendar accounts for Earth's 365.25-day orbit using leap years (where February occasionally has an extra day). However, it doesn't account for Earth's slowing rotation. This means that defining a second in terms of Earth's rotation is imprecise, because that duration will vary! If we kept this simplistic model, then over time, our 24-hour clocks would slowly drift out of sync with the Sun's position, leading to absurd situations like the Sun regularly setting at noon… A separate problem is that the local time will be different for people around the globe if everyone independently tracks the Sun.

To continue using the Gregorian calendar and 24-hour clocks while staying in sync with Earth's movement in space, humans created two main solutions:

UTC

UTC (Coordinated Universal Time) is a time "standard" used to synchronize clocks globally. It is built on the SI second, which is precisely defined as the duration of 9,192,631,770 periods of the radiation of cesium-133 atoms. This "SI second" is a more stable, precise definition than "1/86400 of a solar day". The current UTC time is determined by averaging hundreds of atomic clocks globally (a computation maintained by the International Bureau of Weights and Measures, BIPM), while the International Earth Rotation and Reference Systems Service (IERS) tracks how Earth's rotation compares to it. Clocks in computers and other devices indirectly sync with this consensus time through mechanisms like NTP (Network Time Protocol) servers that broadcast time information.

The IERS also occasionally introduces leap second adjustments to sync the current UTC time with Earth's rotational position. Here's what this looks like practically: Adding a leap second looks like the clock going from 23:59:59 to 23:59:60 (i.e. one more second "happens" before 00:00:00), and subtracting a leap second looks like skipping from 23:59:58 to 00:00:00. Since 1972, 27 leap seconds have been added (none have been subtracted, but it's technically possible). These adjustments are irregular and unpredictable, usually decided a few months in advance (the last one was on December 31, 2016).

What this all means is that we continue using the Gregorian calendar, but a day may have 86401 or 86399 seconds (not always 86400), and so when calculating the duration between days, you have to do number_of_days * 86400 + leap_seconds_delta (where leap_seconds_delta is the sum of all positive and negative leap second adjustments between the two datetimes). So it's still possible to label instants and represent precise durations using the Gregorian calendar and UTC, but you need to know when leap second adjustments occurred so that you can account for those when computing precise durations.
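Here is a minimal Python sketch of that formula. The leap second table is deliberately partial (only the three most recent insertions) and the function name is made up for illustration; a real program would load the full, current list from an authoritative source.

```python
from datetime import date

# Partial table, for illustration only: UTC days that ended with a
# positive leap second (23:59:60).
LEAP_SECOND_DAYS = {
    date(2012, 6, 30): +1,
    date(2015, 6, 30): +1,
    date(2016, 12, 31): +1,
}

def utc_seconds_between(start: date, end: date) -> int:
    """SI seconds from 00:00:00 UTC on `start` to 00:00:00 UTC on `end`.

    Assumes start <= end.
    """
    number_of_days = (end - start).days
    leap_seconds_delta = sum(
        delta for day, delta in LEAP_SECOND_DAYS.items() if start <= day < end
    )
    return number_of_days * 86_400 + leap_seconds_delta

# Two civil days that include the leap second at the end of 2016.
two_days = utc_seconds_between(date(2016, 12, 31), date(2017, 1, 2))
print(two_days)  # 172801
```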

But who is "inserting" leap second adjustments? No one is "physically" inserting them; rather, the IERS modifies the UTC standard to state that a leap second adjustment will occur at a future date (a few months in advance). Systems that care about precise UTC timekeeping must then update their leap second data to account for it (e.g. by ensuring they account for the leap second adjustment when computing the duration between 2 datetimes where one is before and one is after the leap second). In any case, the leap second system has caused various problems in the past few decades, so it has been decided that the leap second system will be abandoned by 2035.

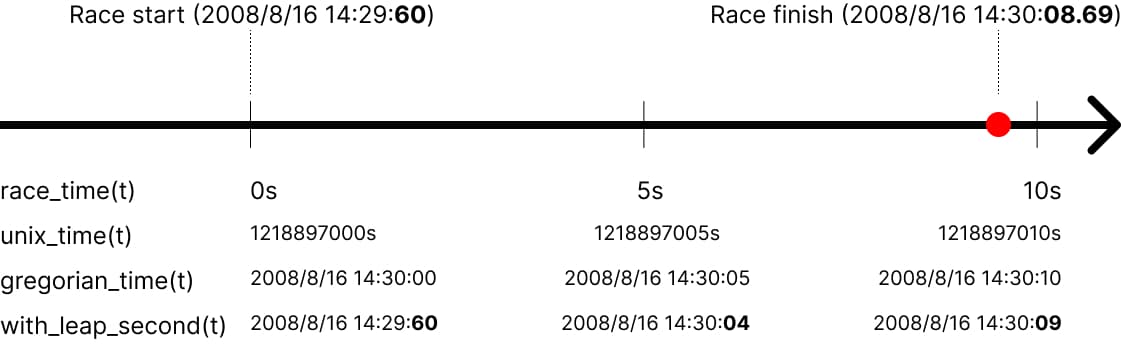

Leap seconds tend to be inserted at midnight, but to visualize what that instant would look like, let's assume that a positive leap second adjustment was scheduled to be inserted at the start of Usain Bolt's 100m race:

The key insight is that 2008/8/16 14:30:08.69 still represents 9.69 seconds after the race started, even though the datetime looks like only 8.69 seconds elapsed. When computing the precise duration between datetimes like 2008/8/16 14:29:59 and 2008/8/16 14:30:09, we know that we're using the UTC time standard, so we have to check for any leap second adjustments between the two datetimes and account for them accordingly (in this case, the duration between the two datetimes is 11 seconds: 10 seconds + 1 leap second).

This is important to understand if your program needs precise timekeeping. JavaScript's Date object (based on Unix time), for example, doesn't account for leap seconds at all (i.e. it doesn't use "true" UTC). When calling Date.now(), the returned number of milliseconds is actually less than the number of milliseconds that have elapsed "in real life" since January 1st, 1970. This may or may not cause bugs depending on program requirements.
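The same behavior can be observed outside JavaScript. As a sketch, Python's standard datetime type also follows Unix-style time: it reports 1 second across the real leap second at the end of 2016, and it cannot even label the instant 23:59:60.

```python
from datetime import datetime, timezone

before = datetime(2016, 12, 31, 23, 59, 59, tzinfo=timezone.utc)
after = datetime(2017, 1, 1, 0, 0, 0, tzinfo=timezone.utc)

# Unix-style arithmetic says 1 second, although 2 SI seconds elapsed,
# because 23:59:60 happened in between.
unix_delta = after.timestamp() - before.timestamp()

# The leap second itself is not a valid label for this type.
try:
    datetime(2016, 12, 31, 23, 59, 60, tzinfo=timezone.utc)
    leap_label_ok = True
except ValueError:
    leap_label_ok = False

print(unix_delta, leap_label_ok)
```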

Timezones

UTC allows humans to coordinate on the current absolute time, but it doesn't directly solve how the Sun is in different positions for people globally. Timezones allow humans to continue using civil time as usual (where "12PM" looks similar globally in terms of the Sun's position in the sky) while still being synced to UTC. In other words, practically all humans use UTC, but they express that UTC time slightly differently, and timezones define how to convert between these local expressions and the "baseline" expression of UTC+0.

Disclaimer: People disagree on the best definitions for "timezone", "standard time", and related concepts, but I'll clearly specify the best definitions in my view below.

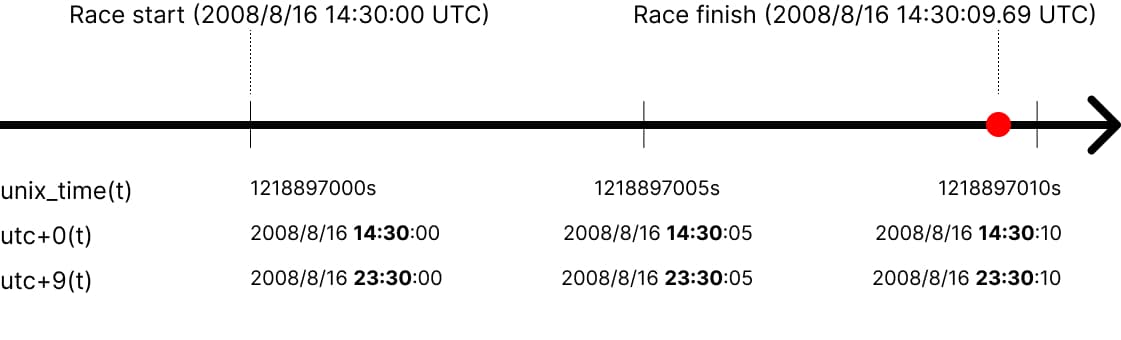

A "timezone" refers to the regions of Earth that follow the same rules for civil time. These rules generally look like a "standard time" that specifies a UTC offset - for example, Japan Standard Time (JST) has offset UTC+9, meaning that the absolute time of 2008/8/16 14:30 UTC+0 looks like 2008/8/16 23:30 UTC+9 in JST.
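As a small illustration in Python (using a fixed-offset timezone object rather than full timezone rules), converting to JST changes the label but not the instant:

```python
from datetime import datetime, timedelta, timezone

# A "standard time" modeled as a fixed UTC offset.
JST = timezone(timedelta(hours=9), name="JST")

instant_utc = datetime(2008, 8, 16, 14, 30, tzinfo=timezone.utc)
instant_jst = instant_utc.astimezone(JST)

print(instant_jst.isoformat())     # 2008-08-16T23:30:00+09:00
print(instant_jst == instant_utc)  # True: same absolute instant
```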

Most standard times fall between UTC-12 and UTC+12, but this isn't a hard rule; in fact, a UTC+14 actually exists in the Line Islands! Regardless of the UTC offset, it's all the same absolute instants in time, just with different labels to refer to those instants. Neil Armstrong took his first step on the moon one time in history - he didn't take another first step an hour later for the next timezone over...

Note: GMT (Greenwich Mean Time) is the standard time used in places like the United Kingdom, defined as UTC+0. The acronym GMT has a lot of history behind it, causing people to mean different things when referring to it (e.g. some people are referring to the mean solar time at the Royal Observatory in Greenwich, where time was measured in astronomic terms instead of using atomic clocks), but these days, people interchangeably saying "GMT" or "UTC" are usually referring to the idea of UTC+0 (i.e. the baseline way to refer to absolute instants in time).

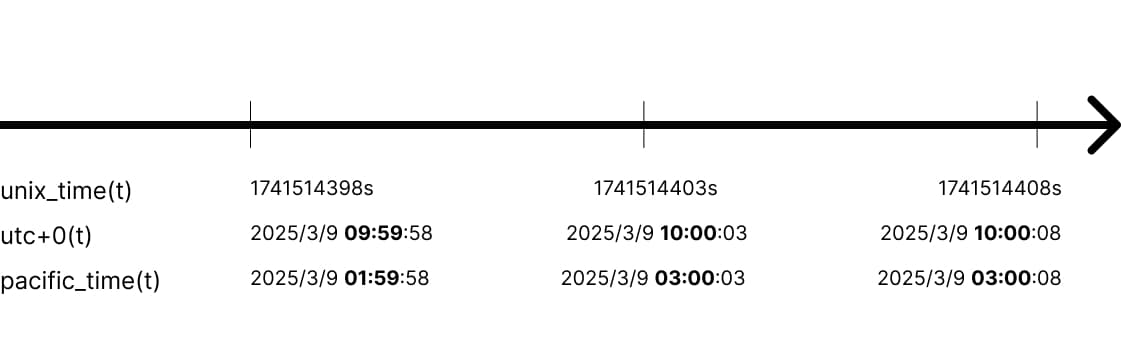

People often assume that timezone rules are always singular, static UTC offsets, but that's not true: for example, California alternates between Pacific Standard Time (UTC-8) and Pacific Daylight Time (UTC-7) every year. The "Pacific Time" (PT) zone refers to the set of regions (like California) that follow both PST and PDT. Moreover, not all timezone rule changes are predictable: for example, Samoa changed their standard time from UTC-11 to UTC+13 in 2011 to better align their civil time with nearby economic partners.

Pacific Time transitioned from PST (UTC-8) to PDT (UTC-7) at Sun 09 Mar 2025 02:00, causing the local time to "spring forward" from 02:00 to 03:00

It's easier to understand this sudden jump from 1:59 to 3:00 when watching the change alongside the corresponding UTC offsets:

    # Pacific Time
    1:58 -08:00
    1:59 -08:00
    3:00 -07:00
    3:01 -07:00

    # UTC+0
    9:58 +00:00
    9:59 +00:00
    10:00 +00:00
    10:01 +00:00

The civil times from the Pacific Time zone refer to the same absolute instants as the UTC+0 labels; what changed is the UTC offset used to generate those labels.
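The same listing can be reproduced with a short Python sketch, assuming Python 3.9+ with the zoneinfo module and an installed timezone database: walk four consecutive UTC minutes and label each one in Pacific Time.

```python
from datetime import datetime, timedelta, timezone
from zoneinfo import ZoneInfo

PACIFIC = ZoneInfo("America/Los_Angeles")
start = datetime(2025, 3, 9, 9, 58, tzinfo=timezone.utc)

rows = []
for minute in range(4):
    utc = start + timedelta(minutes=minute)
    local = utc.astimezone(PACIFIC)  # same instant, different label
    rows.append((utc.strftime("%H:%M %z"), local.strftime("%H:%M %z")))
    print(rows[-1][0], "->", rows[-1][1])
```

The UTC column advances one minute at a time with no gaps; only the Pacific labels jump, at the moment the offset switches from -0800 to -0700.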

The thing to realize is that timezones aren't official "entities" defined by some international organization - rather, local governments determine the time rules of their localities, and then a timezone refers to the emergent grouping of regions that follow the same rules of civil time. (i.e. a "timezone" is useful language to refer to this group of regions, not an official "entity" that governments directly control. Local governments instead change the standard times they follow, and then that may change how we group regions together in timezones based on who shares what rules of civil time now).

Part of the reason why the terms get confusing is that you can technically think of a standard time as a timezone: Instead of thinking of GMT as a rule defined as UTC+0, you can also think of it as a timezone of regions like the United Kingdom and Ireland where, for some portion of the year, they follow the rule UTC+0.

The problem with treating standard times as timezones is that they're unstable: The United Kingdom only follows GMT (UTC+0) for part of the year because they switch to BST for summer. Treating GMT and BST as separate timezones would mean that United Kingdom residents need to select multiple timezone options in software…

So grouping regions based on who has the same local time or is currently following the same standard time is unstable. This is why timezone UIs in software generally list timezones that are stable year-round (i.e. every region in the timezone shares the same present rules and projected future rules for civil time, assuming no legislation changes happen). So instead of selecting PST or PDT, California residents select something like "Pacific Time (PT)".

Note: Some timezone UIs confusingly will show something like "GMT" to refer to "the timezone that follows both GMT and BST".

The fact that UTC offsets can change in a timezone has some interesting implications that'll be easier to see by converting the above 1D timeline images into 2D graphs:

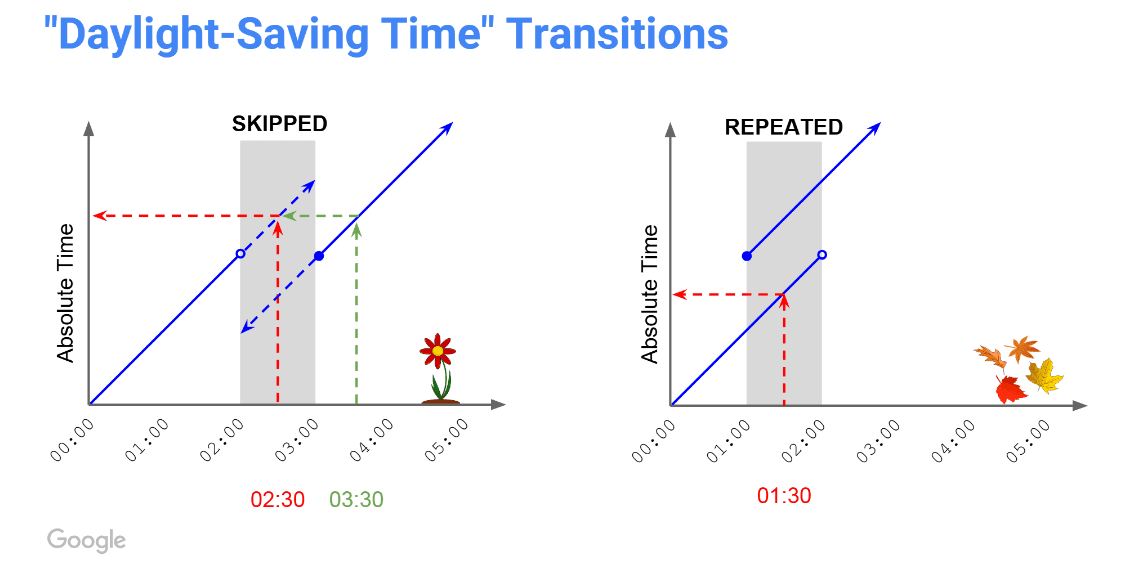

"Spring forward" and "fall back" graphs (Source: CppCon 2015: Greg Miller "Time Programming Fundamentals")

If the UTC offset moves backwards by 1 hour, then one hour of civil datetimes is repeated (the same principle applies for moving backwards by any other arbitrary amount of time). This means that the absolute instant in time corresponding to a civil datetime in the repeated hour is ambiguous: for example, in the Pacific Time zone, 02 Nov 2025 01:30 happens twice (once in PDT and once in PST). Similarly, if the UTC offset moves forward by 1 hour, then one hour of civil datetimes is skipped/non-existent - in the Pacific Time zone, 09 Mar 2025 02:00 - 02:59 will never happen.

You can encapsulate all of this complexity with two functions:

- local_time = f(timezone, absolute_time)
  - Every absolute_time maps to exactly one local_time for a given timezone; this is true for all possible inputs to the function.

- absolute_time = f(timezone, local_time)
  - Every local_time can map to 0, 1, or 2+ absolute_time values for a given timezone. Generally, a local_time will map to exactly 1 absolute_time, but it's possible that the input local_time is non-existent (due to a forward UTC offset transition) or ambiguous (due to a backwards UTC offset transition). Different implementations handle these two cases differently (e.g. throwing an exception or selecting the latest valid time).
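The two functions can be sketched in Python with the standard zoneinfo module (Python 3.9+, with a timezone database installed). The function names are illustrative, and the reverse direction uses one possible technique, a round-trip check over both values of the fold attribute, so it finds at most 2 candidates, which covers a single offset transition:

```python
from datetime import datetime, timezone
from zoneinfo import ZoneInfo

def to_local(tz: ZoneInfo, absolute: datetime) -> datetime:
    """absolute_time -> local_time: always exactly one answer."""
    return absolute.astimezone(tz)

def to_absolute(tz: ZoneInfo, local_naive: datetime) -> list[datetime]:
    """local_time -> absolute_time: returns 0, 1, or 2 candidates (in UTC)."""
    candidates = []
    for fold in (0, 1):  # fold selects the earlier/later reading of a wall time
        utc = local_naive.replace(tzinfo=tz, fold=fold).astimezone(timezone.utc)
        # A wall time that really occurred must round-trip back to itself.
        round_trip = utc.astimezone(tz).replace(tzinfo=None)
        if round_trip == local_naive and utc not in candidates:
            candidates.append(utc)
    return candidates

PACIFIC = ZoneInfo("America/Los_Angeles")
normal = to_absolute(PACIFIC, datetime(2025, 6, 1, 12, 0))     # 1 instant
skipped = to_absolute(PACIFIC, datetime(2025, 3, 9, 2, 30))    # 0 instants
repeated = to_absolute(PACIFIC, datetime(2025, 11, 2, 1, 30))  # 2 instants
print(len(normal), len(skipped), len(repeated))
```

Returning a list leaves the policy decision (raise an error, pick the earlier instant, pick the later one) to the caller instead of hiding it inside the conversion.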

Google Calendar chooses the first valid civil time to the right of the invalid one when attempting to input a non-existent value (due to a "spring forward" transition from 02:00 to 03:00 in the Pacific Time zone)

What all this means is that:

- Unix time and the Gregorian calendar have slightly different goals. The Gregorian calendar and UTC (with leap seconds and leap years) help sync timekeeping to the Sun, whereas Unix time is more about representing precise instants and durations (ignoring concerns around leap seconds for example). Civil timekeeping isn't the same thing as precise time measurement.

- Leap seconds and timezones are why a civil day is a "period" (i.e. an ambiguous duration): its duration may be less/greater than the duration of a "physical"/absolute day (86,400 SI seconds) due to leap second adjustments or UTC offset transitions.

- The exact duration between 2 civil datetimes may not be immediately obvious due to potential leap second adjustments and UTC offset transitions between those two datetimes.

- Computers treat time in a mathematically precise/well-defined way: humans "declared" the Unix epoch to be January 1 1970 00:00:00 UTC+0, and atomic clocks allow us to precisely calculate time elapsed since then such that we know the current time is 06/08/2025 07:37PM (in California at the time I'm writing this post). However, this precision breaks down the further back we go: UTC and atomic timekeeping only began around the mid-20th century, so pre-atomic timestamps lack "true" second-level precision, and even day-level precision is iffy due to calendar reforms (e.g. the Gregorian calendar is actually a successor to the Julian calendar), inconsistent historical record-keeping, and Earth's unstable rotation speed. When we retroactively refer to the datetime "Jan 1 1600 00:00:00 UTC+0", people alive at that absolute instant (some precise # of SI seconds ago) may not have labeled that instant in the same way! Maybe they'd say January 5 1600 (i.e. maybe our timekeeping is off by a few days and that inaccuracy has been lost to time)! However, certainly for datetimes after ~1970, we can be confident in the accuracy of our timekeeping.

Synthesis

The above conceptual model is all you need to effectively understand how to handle time in programming broadly speaking and make sense of common, misleading advice online. A lot of the commonly cited "gotchas" around timezones and leap seconds can be encapsulated with this point: You can't make assumptions about the validity and progression of civil time that generalize for all timezones. Every region's UTC offset can change by any arbitrary amount at any arbitrary moment, meaning that you have to know the history of time rule changes as well as the current + projected future rules for a given timezone in order to know how civil time progresses every SI second. Think of timezone rules as arbitrary, ever changing UTC offsets. Civil time and UTC are politically/socially convenient constructs, so it's not surprising that they will be messy and imperfect. Moreover, you can't make assumptions about precisely when leap second adjustments will occur; for example, if you wanted to compute the UTC+0 datetime that is ~158 million seconds (~5 years) into the future, you could do that computation, but if a positive leap second is added within that timeframe, then your computed UTC datetime is off by one second because it now represents 158M + 1 second. Whether this is a problem or not depends on the requirements of your program, but the point is that the advice to "just use UTC+0" because of the idea that these timestamps won't change is technically wrong and it's important to understand why.

The IANA Timezone Database

Converting absolute time (UTC+0) to civil time for a given location requires knowing what UTC offset applies at that absolute instant. For example, as I write this, Indianapolis (a city in the state of Indiana, USA) is on EDT (Eastern Daylight Time), so when converting the current absolute datetime to EDT, you'd apply the UTC-4 offset to the datetime. Similarly, the times when Indianapolis transitions between EDT and EST are well-defined, so for any future date, it's possible to check if EST or EDT will be in effect and apply the corresponding UTC offset. But what about past datetimes? Indianapolis didn't always follow EST/EDT; they used to follow CST (Central Standard Time), for example, and time rules didn't fully match New York's rules until around 2006. So to determine the local datetime in Indianapolis for the absolute instant Jan 1 2004 00:00:00 UTC, you can't project the current ruleset for Indianapolis backwards to find the relevant UTC offset. You instead need to check the historical records for what offset was active in the past. The exact UTC offset isn't easily predictable for all locations on Earth because time rules can change arbitrarily. So where can you get a detailed history of the time rules for a given location?

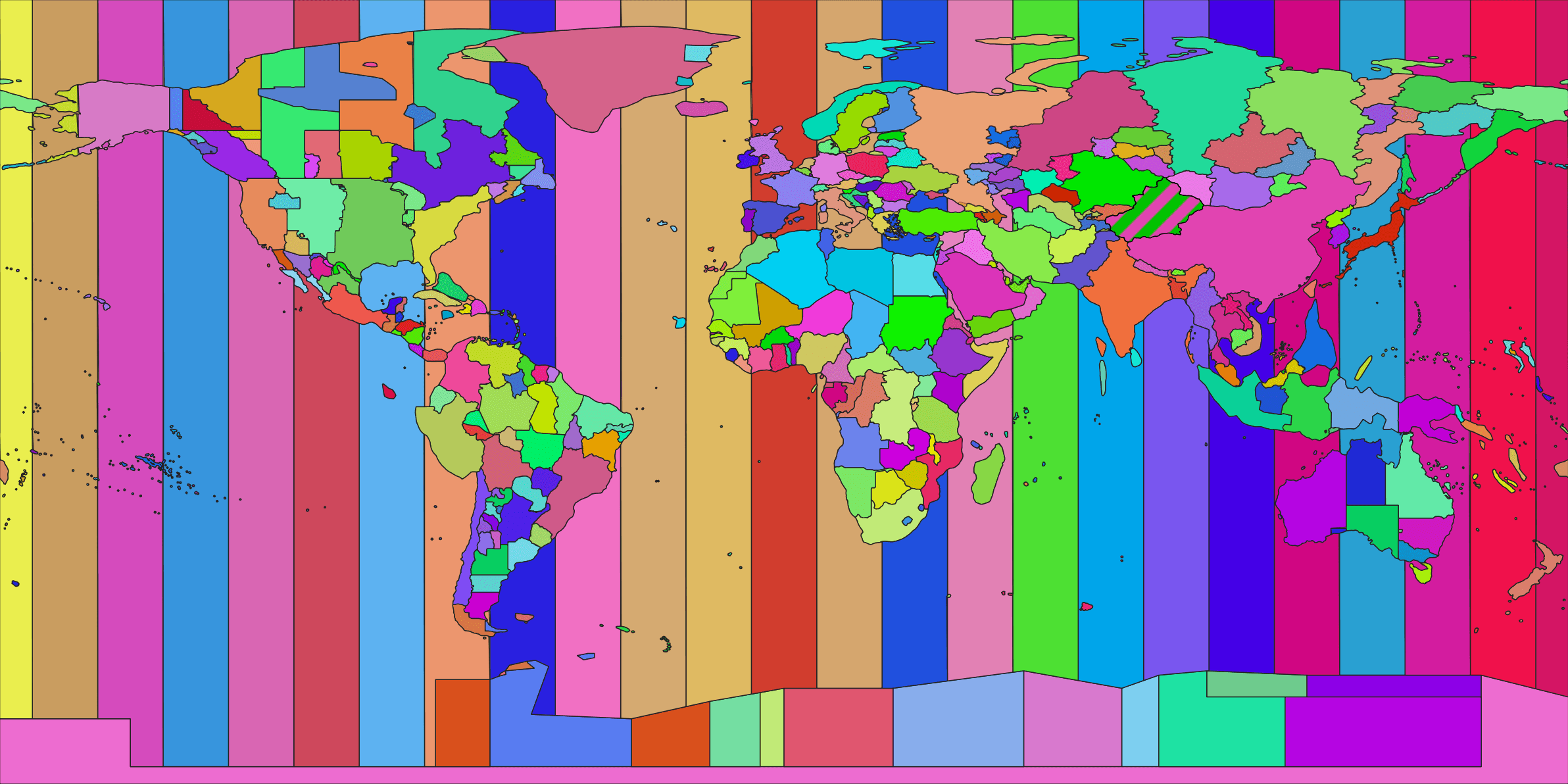

The best open-source answer is the IANA database. It's a database that tracks historical timezone rule changes globally. An IANA timezone uniquely refers to the set of regions that not only share the same current rules and projected future rules for civil time, but also share the same history of civil time since 1970-01-01 00:00+0. In other words, this definition is more restrictive about which regions can be grouped under a single IANA timezone, because if a given region changed its civil time rules at any point since 1970 in a way that deviates from the history of civil time for other regions, then that region can't be grouped with the others (this is why Indianapolis citizens use the America/Indiana/Indianapolis IANA timezone instead of the America/New_York timezone). The size of a given IANA timezone (e.g. America/Los_Angeles or America/New_York) is typically smaller than timezones like "Pacific Time" and "Eastern Time", because only regions with shared civil time history since 1970 are grouped together, whereas a traditional time zone (e.g. "Eastern Time") merely specifies the current and future UTC offset rules.

Some IANA timezones are relatively small with irregular borders (Source: Evansiroky2, via Wikimedia Commons, CC BY 4.0)

By grouping regions that share the same past, present, and future rules for civil time (i.e. the same rules from 1970 onwards), handling timezones becomes much easier: For a given location, as long as you know the corresponding IANA timezone, you can easily convert any absolute time from 1970 onwards into a civil time. Because of the above reasons, many operating systems and timezone libraries already use this database (Mac and Linux store the IANA database at /usr/share/zoneinfo; for Windows, you can convert Windows timezones into IANA timezones).
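As an illustration of why Indianapolis needs its own identifier, this Python sketch (zoneinfo, Python 3.9+; the helper name is made up) compares the UTC offsets of the two IANA timezones for a summer instant in 2004 and one in 2025:

```python
from datetime import datetime, timezone
from zoneinfo import ZoneInfo

INDY = ZoneInfo("America/Indiana/Indianapolis")
NYC = ZoneInfo("America/New_York")

def offset_hours(tz: ZoneInfo, absolute: datetime) -> float:
    """UTC offset (in hours) that `tz` applies at the given absolute instant."""
    return absolute.astimezone(tz).utcoffset().total_seconds() / 3600

summer_2004 = datetime(2004, 7, 1, 12, 0, tzinfo=timezone.utc)
summer_2025 = datetime(2025, 7, 1, 12, 0, tzinfo=timezone.utc)

# In 2004 Indianapolis did not observe daylight saving time; New York did.
print(offset_hours(INDY, summer_2004), offset_hours(NYC, summer_2004))
# Today both follow the same rules.
print(offset_hours(INDY, summer_2025), offset_hours(NYC, summer_2025))
```

The two zones agree today but disagree about the past, which is exactly the case that a single "Eastern Time" label cannot express.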

The database is updated occasionally to include recent timezone rule changes around the world, and sometimes to backfill rules from before 1970. While the database does include many rules from before 1970, those rules are not necessarily comprehensive and fully accurate: The database focuses on having an accurate history of rules from 1970 onward (and you can think of the rules from before 1970 as a standardized fiction). This is not an issue for most programs.

While timezone rules can change and there might be a slight lag before the changes are reflected in database releases, virtually all timezone scenarios can be expressed in the IANA format since every possible time rule change is expressible as UTC offset changes at given absolute instants (and in any case, most applications don't need more up-to-date data than what the standard database provides). Even if theoretically a region using UTC+0 year-round were to skip 2 days, you could express that as a UTC offset transition from UTC+0 to UTC+48 (it's totally legal, even though that'd be a weird thing to do!). This means that practically all of the bizarre UTC offset transitions that people cite online are expressible in the IANA database. (I say "practically all" because a few cases that people mention are from before 1970, among other caveats).

For example, people often cite Samoa "skipping" a day as evidence of the complexity of handling timezones; here's what that instant looks like in the IANA database:

    # Zone  NAME          STDOFF     RULES  FORMAT  [UNTIL]
    Zone    Pacific/Apia   12:33:04  -      LMT     1892 Jul 5
                          -11:26:56  -      LMT     1911
                          -11:30     -      %z      1950
                          -11:00     WS     %z      2011 Dec 29 24:00
                           13:00     WS     %z

The active UTC offset for Samoa changed from UTC-11 to UTC+13 at 2011 Dec 29 24:00. That's it!
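To see the effect of this transition from a program's point of view, here is a Python sketch (zoneinfo, Python 3.9+) that walks three days of UTC hours and collects the civil dates they map to in Pacific/Apia. The printed offsets include whatever daylight saving adjustment the WS rules apply, so they may differ by an hour from the standard offsets in the Zone lines.

```python
from datetime import date, datetime, timedelta, timezone
from zoneinfo import ZoneInfo

APIA = ZoneInfo("Pacific/Apia")
start = datetime(2011, 12, 29, 0, 0, tzinfo=timezone.utc)

local_dates = set()
previous_offset = None
for hour in range(72):  # every UTC hour from Dec 29 through Dec 31
    local = (start + timedelta(hours=hour)).astimezone(APIA)
    local_dates.add(local.date())
    if local.utcoffset() != previous_offset:
        # Report each change in the active UTC offset.
        print(local.isoformat())
        previous_offset = local.utcoffset()

# No absolute instant is labeled December 30, 2011 in Samoa.
print(date(2011, 12, 30) in local_dates)  # False
```

Every UTC hour still maps to exactly one civil datetime; the "skipped day" is just a set of labels that never gets used.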

For absolute instants after 1970, there's almost always an unambiguous conversion to civil time for a given IANA timezone. Most programs won't need to worry about the caveats, but I'll mention them here for completeness:

- The IANA database doesn't represent the DST rules for Morocco (Africa/Casablanca) beyond 2087 because the rules for daylight savings time in Morocco are based on the Islamic calendar and aren't nicely projectable into the future in a way that the database can concisely represent. As a result, the database manually lists the DST transitions for every year up to 2087, and that list may be manually extended further at some point in the future unless Morocco changes their time rules.

- Africa/Monrovia replaced local mean time in 1972, and Nepal (Asia/Kathmandu) did the same in 1986. Local mean time isn't as precise as UTC, and so if you want precise representations of absolute instants in these timezones, you would only be able to do so after they replaced local mean time.
  - (I haven't verified myself if there are other IANA timezones similar to the ones I mentioned above, but I'm pretty sure that this sort of thing is rare and not something that most programs need to worry about)

"But how would I create a timezone picker where people know which IANA timezone identifier to pick? Can I deduce what IANA timezone to use if I have a location (as longitude/latitude coordinates or an address for example)?" Finding the official standard time and IANA timezone for any given person anywhere in the world at any point in history is a hard problem. If you rely on trying to deduce a timezone given a location (e.g. as an event planning website where people specify an event time and location), you are almost certainly going to make some mistakes. The best thing you can do is to let people specify the timezone that they want themselves, using something like the Unicode CLDR humanized names for IANA timezones. Also, most OSes will tell you the IANA timezone that someone is currently using (on Windows, you can use Unicode CLDR's data here to convert Windows timezones to IANA timezones), and so you can also use people's system timezone as the default in your program, and that's sufficient for many use cases.

"What happens if a region within a given IANA timezone changes their civil time rules? If their rules diverge from the rules of other regions in the timezone, then the IANA database would need to create a new timezone for that region; how exactly would I detect and handle that in my program?" The first thing to realize is that past datetimes won't have problems, because all datetimes from before the rule change (that causes a certain location to diverge into its own IANA timezone) will still be correct, because it's only the future rules that changed for the location, not the past rules. You'll only have issues if you fail to update to the correct IANA timezone when you're inputting datetimes going forward for that location. There are a few ways you could detect this:

- If you have access to the precise location (latitude/longitude) of a given entity that you have a corresponding datetime for, then you could use something like Google's Time Zone API to check if the IANA key for that location has changed. The issue though is that you don't have control over what version of the IANA database that Google is using, and there's still some margin for error (e.g. at the borders between two timezones).

- Assuming that people's system timezone on their OS gets properly updated to the correct IANA timezone, you could notice if that IANA timezone ever diverges from what the system timezone used to be, and act accordingly.

Ultimately, there's only so much you can do to detect this; luckily, most programs won't need to worry about this. If you need precise time rules, you could subscribe to the IANA mailing list where patches to the timezone database are discussed, and if you bundle a copy of the IANA database with your application, you could make changes to the data yourself manually and handle things on a case-by-case basis. Sometimes, local governments change their time rules with little notice, so there's only so much that you can do (e.g. on October 17th, 2017, Sudan gave notice for a time rule change that would go into effect November 1st, 2017, so only 15 days of notice).

Examples

Here are a few hand-picked use cases showing how to apply the above conceptual model:

Chat forums that order messages by timestamp

We care about the precise instant that messages were sent, so we can record the current time as a UTC+0 datetime when the server receives chat messages. Then, when displaying messages, the client can convert that UTC+0 datetime into the system timezone.
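A minimal Python sketch of this flow (the Message class and function names are hypothetical, not from any framework): the server stamps each message with an aware UTC datetime, ordering is a plain comparison of instants, and the viewer's timezone is only applied when formatting for display.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Optional
from zoneinfo import ZoneInfo

@dataclass
class Message:
    text: str
    sent_at: datetime  # always an aware datetime in UTC

def receive(text: str, now: Optional[datetime] = None) -> Message:
    """Server side: stamp the message with the current absolute time."""
    return Message(text, now or datetime.now(timezone.utc))

def display_time(message: Message, viewer_tz: str) -> str:
    """Client side: label the stored instant in the viewer's timezone."""
    local = message.sent_at.astimezone(ZoneInfo(viewer_tz))
    return local.strftime("%Y-%m-%d %H:%M")

# Fixed timestamps are passed in here only to make the example repeatable.
messages = [
    receive("second", datetime(2025, 6, 8, 19, 38, tzinfo=timezone.utc)),
    receive("first", datetime(2025, 6, 8, 19, 37, tzinfo=timezone.utc)),
]
messages.sort(key=lambda m: m.sent_at)  # order by absolute instant

print(display_time(messages[0], "America/Los_Angeles"))  # 2025-06-08 12:37
print(display_time(messages[0], "Asia/Tokyo"))           # 2025-06-09 04:37
```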

One thing to note is that this all assumes that the computer that the server is running on can return an accurate absolute time. When calling something like time() in C, you're requesting the current time on the machine you're running on, but it's possible for the computer's clock to be slightly inaccurate or non-monotonic (e.g. if a leap second recently occurred, your machine may be off by a second before it does an NTP server sync to get the current UTC time). This is likely not a major concern for something like a chat forum, but the general point is that depending on your program's requirements, you'll want to verify your assumptions (such as the assumption that you can actually get the correct current absolute time if that's something that your program requires).

Let's say you want to add a feature to the chat forum that allows people to schedule a message for "1 day" in the future. Well, the thing to realize is that the language "1 day" is ambiguous: Does it mean adding 24 hours (i.e. 86,400 seconds), or does it mean incrementing the day field by 1 on the civil datetime (which may mean only adding 23 hours if the person's system timezone has a daylight savings transition where the clock shifts forwards by 1 hour)? This is not a major issue for this program, but it could cause confusion (and it certainly could be a major issue for other programs with stricter precision requirements).
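Both readings of "1 day" can be sketched in Python (zoneinfo, Python 3.9+; the variable and helper names are illustrative), using a start time on the day before the 2025 "spring forward" transition in Pacific Time:

```python
from datetime import datetime, timedelta, timezone
from zoneinfo import ZoneInfo

PACIFIC = ZoneInfo("America/Los_Angeles")
now_local = datetime(2025, 3, 8, 12, 0, tzinfo=PACIFIC)

# Reading 1: add 24 hours of absolute time (do the arithmetic in UTC).
plus_24h = (now_local.astimezone(timezone.utc) + timedelta(hours=24)).astimezone(PACIFIC)

# Reading 2: increment the civil day. Python's arithmetic on an aware
# datetime operates on the wall-clock fields, so the time of day is kept.
plus_civil_day = now_local + timedelta(days=1)

def elapsed_hours(a: datetime, b: datetime) -> float:
    """Absolute time between two aware datetimes, measured in UTC."""
    delta = b.astimezone(timezone.utc) - a.astimezone(timezone.utc)
    return delta.total_seconds() / 3600

print(plus_24h.isoformat(), elapsed_hours(now_local, plus_24h))
print(plus_civil_day.isoformat(), elapsed_hours(now_local, plus_civil_day))
```

The first result lands at 13:00 local time after 24 hours; the second lands at 12:00 local time after only 23 hours. Which one is right depends on what the person scheduling the message meant.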

Event planning website

Let's say I'm creating a website for users to list information for upcoming events they're hosting. Someone wants to schedule a lecture event where some people will visit the venue in-person, and others around the world will watch the lecture live on Zoom. They specify that the event will happen at 2026-06-19 07:00 at UCLA's Royce Hall (and the website uses their system timezone America/Los_Angeles to interpret that 07:00).

How should the server save this data? If you follow the advice of "just use UTC bro", you might convert 2026-06-19 07:00 UTC-7 to 2026-06-19 14:00 UTC+0 and store that datetime. But what happens if California then announces that it plans to abolish daylight savings time starting January 1st, 2026? (This idea is occasionally discussed by politicians.) When you converted 07:00 to UTC+0, you were assuming that on June 19, 2026, California would be on daylight savings time (Pacific Daylight Time). Now that this is no longer true, the UTC+0 datetime that you stored is off by one hour! You can think of the UTC datetime that you saved as a "cached" datetime, because it's based on outdated time rules.

The user's intention when specifying "7AM California" was "this event will start at 7AM no matter what, even if California timezone rules change such that the absolute instant in time for the event changes. My intention is for the local clock to show 07:00 when the event starts." The behavior that the creator of this event likely expects is that the event continues to occur at 07:00 in the timezone of the venue location: the time stays the same in civil terms for local visitors of the venue, but changes for everyone in other timezones (which maybe you'd handle by sending an email notification to registered event participants).

One way the website could handle this is to store the user's exact input 2026-06-19 07:00 together with the UTC+0 version of that datetime, computed under the assumption that the timezone rules won't change (Edit 7/10/25: you'd also save the timezone America/Los_Angeles). This way, we can keep using the UTC+0 datetime for all logic, and we can recompute that UTC+0 datetime once we detect that the time rules for that timezone have changed.
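A rough sketch of that storage scheme in Python might look like the following. The ScheduledEvent record and the helper names are hypothetical; the idea is only that the user's input and timezone are the source of truth, and the UTC+0 value is a cache derived from whatever timezone database is currently installed.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from zoneinfo import ZoneInfo


@dataclass
class ScheduledEvent:
    local_input: datetime  # naive datetime, exactly as the user typed it
    tz_name: str           # IANA timezone of the venue
    cached_utc: datetime   # derived value; valid only for the rules it was computed with


def resolve_utc(local_input: datetime, tz_name: str) -> datetime:
    """Interpret the civil datetime under the currently installed time rules."""
    return local_input.replace(tzinfo=ZoneInfo(tz_name)).astimezone(timezone.utc)


def create_event(local_input: datetime, tz_name: str) -> ScheduledEvent:
    return ScheduledEvent(local_input, tz_name, resolve_utc(local_input, tz_name))


def refresh(event: ScheduledEvent) -> bool:
    """Recompute the cached UTC datetime; return True if it changed."""
    fresh = resolve_utc(event.local_input, event.tz_name)
    changed = fresh != event.cached_utc
    event.cached_utc = fresh
    return changed


lecture = create_event(datetime(2026, 6, 19, 7, 0), "America/Los_Angeles")
```

Calling refresh on every stored event after a timezone database update is the "recompute" step; a True result is a natural trigger for notifying registered participants.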

Here's another example: What if someone was listing an in-person event for viewing an upcoming solar eclipse? The eclipse will happen at an absolute instant in time, so in this case, the time that the user specifies should be converted to a UTC+0 datetime, and then if time rules ever change, then it would be correct to keep using that UTC+0 datetime and convert it to the new local datetime for the event. So there isn't a "right" answer for the implementation; the point is to be clear on what the user's intentions are and handle those accordingly.
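The difference between the two intentions can be sketched with fixed UTC offsets standing in for "old" and "new" time rules. Everything here is illustrative: NEW_RULES is a hypothetical rule change, the function names are made up, and the eclipse instant is a placeholder value rather than a real eclipse.

```python
from datetime import datetime, timedelta, timezone

OLD_RULES = timezone(timedelta(hours=-7))  # offset assumed when the event was created
NEW_RULES = timezone(timedelta(hours=-8))  # hypothetical offset after a rule change


def eclipse_local_time(instant_utc: datetime, rules: timezone) -> datetime:
    """Instant-anchored event: the UTC value is fixed, the local display is derived."""
    return instant_utc.astimezone(rules)


def lecture_instant(wall_time: datetime, rules: timezone) -> datetime:
    """Wall-time-anchored event: the local time is fixed, the UTC instant is derived."""
    return wall_time.replace(tzinfo=rules).astimezone(timezone.utc)


eclipse = datetime(2026, 6, 19, 14, 0, tzinfo=timezone.utc)  # placeholder instant
lecture = datetime(2026, 6, 19, 7, 0)                        # naive civil datetime

# After the rule change, the eclipse shows up an hour earlier on local clocks
# (07:00 becomes 06:00), while the lecture stays at 07:00 locally and its
# UTC instant moves from 14:00 to 15:00.
eclipse_before = eclipse_local_time(eclipse, OLD_RULES)
eclipse_after = eclipse_local_time(eclipse, NEW_RULES)
lecture_before = lecture_instant(lecture, OLD_RULES)
lecture_after = lecture_instant(lecture, NEW_RULES)
```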

A personal project

Here's a specific example from my life: I'm currently working on a desktop app (Windows/Linux/Mac) that needs accurate civil datetimes from 1970 onward. Here's what I plan to do:

- Use a library that works directly with a copy of the IANA database (which I'll bundle with my application instead of relying on the OS data), and I'll periodically download the latest version of the database to keep it reasonably up-to-date. I'll store computed UTC datetimes for all inputted local times, and then for every new version of the IANA database, reconvert the local datetimes into UTC+0 datetimes using the latest IANA database version (i.e. applying any potential changes that have occurred). There's no need to be smart for now about only recomputing for timezones that have actually changed.

- This approach to fetching the latest IANA database is similar to how time libraries like tzdata in Elixir do things.

- I'll convert Windows timezones into IANA timezones using this file from the Unicode CLDR project.

- Save the user's system timezone when the application first starts. For UI operations where users are specifying a datetime, prefill the timezone option with that saved timezone, but also allow them to change the value of that timezone dropdown, and also have a "Make default" checkbox for the timezone. This way, the default behavior is what most users want, and changing the default timezone or doing a one-off for a different timezone is not too difficult. The dropdown will use humanized names for the IANA timezone keys, which I'll get from the Unicode CLDR project here.
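As a sketch of the Windows-to-IANA conversion step, the snippet below parses a small hand-written excerpt shaped like the CLDR windowsZones.xml mapping, where territory "001" marks the default IANA zone for a Windows timezone name. The excerpt and the function name load_default_mapping are assumptions for illustration; the real file is much larger and should be read from the CLDR release you bundle.

```python
import xml.etree.ElementTree as ET

# Hand-written excerpt in the assumed shape of CLDR's windowsZones.xml.
SAMPLE = """<supplementalData>
  <windowsZones>
    <mapTimezones>
      <mapZone other="Pacific Standard Time" territory="001" type="America/Los_Angeles"/>
      <mapZone other="Pacific Standard Time" territory="CA" type="America/Vancouver"/>
      <mapZone other="Tokyo Standard Time" territory="001" type="Asia/Tokyo"/>
    </mapTimezones>
  </windowsZones>
</supplementalData>"""


def load_default_mapping(xml_text: str) -> dict[str, str]:
    """Map each Windows timezone name to its default ("001") IANA timezone."""
    mapping = {}
    for zone in ET.fromstring(xml_text).iter("mapZone"):
        if zone.get("territory") == "001":
            # "type" may list several IANA names separated by spaces; take the first.
            mapping[zone.get("other")] = zone.get("type").split()[0]
    return mapping


windows_to_iana = load_default_mapping(SAMPLE)
```

Using only the "001" rows loses the per-territory refinements, which is one reason to let the user override the detected timezone in the dropdown.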

Tl;dr

- Humans use the Gregorian calendar and UTC for precise timekeeping that aligns with Earth's movement in space; globally, they express this UTC time in different ways, and timezones define how to convert between these local expressions and the baseline of UTC+0.

- Timezone rules can change by any arbitrary amount at any arbitrary moment; you can't create rules to predict the progression/validity of civil time that generalize for every location on Earth. This makes handling timezones look complex/difficult, but it's simple when using a good abstraction for grouping regions into timezones. The IANA timezone database only groups regions into timezones if they all have identical civil time history from 1970 onward; knowing what UTC offset to use at a given absolute instant is a single lookup in this database for a given timezone. The complexity isn't about "what UTC offset do I use", but rather the following cases (depending on your program requirements):

- How do I help users select the correct (IANA) timezone?

- How do I handle future time rule changes for a given timezone?

- How do I handle non-existent/ambiguous civil times (that come about as a result of forward/backwards UTC offset transitions)?

- How do I effectively identify and handle the user's true intentions around datetimes? (since "just use UTC bro" will not accomplish that on its own)
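For the non-existent/ambiguous case, here's one way to detect the problem in Python, using the fold attribute that the standard library provides for repeated wall-clock times. The classify function is a hypothetical helper, and the dates assume the current America/Los_Angeles rules (clock forward on March 8th, 2026 and back on November 1st, 2026).

```python
from datetime import datetime, timezone
from zoneinfo import ZoneInfo


def classify(naive: datetime, tz: ZoneInfo) -> str:
    """Classify a civil datetime as 'unique', 'ambiguous', or 'nonexistent'."""
    first = naive.replace(tzinfo=tz, fold=0)
    second = naive.replace(tzinfo=tz, fold=1)
    if first.utcoffset() == second.utcoffset():
        return "unique"
    # The two interpretations disagree, so the time sits on a transition.
    # A real wall-clock time survives a round trip through UTC; a time
    # inside a gap comes back shifted.
    round_trip = first.astimezone(timezone.utc).astimezone(tz).replace(tzinfo=None)
    return "ambiguous" if round_trip == naive else "nonexistent"


LA = ZoneInfo("America/Los_Angeles")
spring_gap = classify(datetime(2026, 3, 8, 2, 30), LA)    # clock jumps 02:00 -> 03:00
autumn_fold = classify(datetime(2026, 11, 1, 1, 30), LA)  # 01:00-02:00 happens twice
ordinary = classify(datetime(2026, 6, 19, 7, 0), LA)
```

What to do with an ambiguous or non-existent input (reject it, pick the earlier offset, ask the user) depends on the user's intentions, which is the same theme as the rest of this list.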

Open questions/topics that I'm curious about

- How is the current time tracked for TAI and UT1? How do atomic clocks work?

- How does general relativity relate to the idea of time being a universal, linear, forward-moving "entity"?

- How do NTP servers work? How do we globally sync digital and physical clocks to the current time?

- What's the history of human timekeeping? Particularly before the Gregorian calendar, what historical records do we have for who was tracking/tallying the days elapsed over time? How did people coordinate on the current date globally (if at all)? How did local mean time (LMT) work in the past?

- What's the history of tracking elapsed time? How do physical/analog clocks work?

- How is a precise sleep(t) operation implemented? (via the operating system and hardware)

- What would a better system for civil timekeeping look like? What happens if/when humans colonize other planets in terms of civil timekeeping?

- What explains the slowdown in IANA timezone database updates? Were past updates largely comprised of backfilling historical data? Are localities not changing time rules as often these days?

- It'd be great to see more concrete case studies showing end-to-end how certain programs handle time logic (since this is often significantly more informative than high level explanations)

- How do multiplayer video games work given difficulties around time synchronization and latency? (and how does that differ going from a slower turn-based game to a fast-paced first-person shooter?)

- It'd be great to read about API design for handling time. You can learn a lot by seeing how different implementations do things (e.g. C++ date library, Rust chrono library, JS Date vs Temporal vs libraries like moment/date-fns/luxon/etc, Joda Time, Noda Time).

- It'd be insightful to see how conversions between calendar systems (Gregorian, Islamic) or clocks (UTC, TAI) are implemented in certain libraries

- It'd be great to see how different programs handle the UX around selecting timezones and handling time rule changes

The Best Sources

- Time Programming Fundamentals - Greg Miller (youtube.com)

- Working with timezones - David Turner (davecturner.github.io)

- How to Think About Time - Kevin Bourrillion (errorprone.info)

Sources

- 7.3. Date and Floating Time (icalendar.org)

- Are Windows timezone written in registry reliable? (stackoverflow.com)

- cctz C++ library (github.com)

- CppCon 2015: Howard Hinnant “A C++14 approach to dates and times” (youtube.com)

- CppCon 2016: Howard Hinnant “Welcome To The Time Zone” (youtube.com)

- Current “Zulu” Military Time, Time Zone (timeanddate.com)

- Database.PostgreSQL.Simple.Time (hackage.haskell.org)

- Epoch (calendars.fandom.com)

- Epoch (wikipedia.org)

- Explanatory Supplement to Metric Prediction Generation: Chapter 2 - Time Scales, Epochs, and Intervals (spsweb.fltops.jpl.nasa.gov)

- Falsehoods programmers believe about time (gist.github.com)

- Falsehoods programmers believe about time (infiniteundo.com)

- Falsehoods programmers believe about time and time zones (creativedeletion.com)

- Falsehoods programmers believe about time zones (zainrizvi.io)

- Falsehoods Programmers Believe in (github.com)

- File:Deviation of day length from SI day.svg (commons.wikimedia.org)

- File:Timezone-boundary-builder release 2023d.png (commons.wikimedia.org)

- Fixing JavaScript Date – Getting Started (maggiepint.com)

- Galactis ISO Timestamps (youtube.com)

- Global timekeepers vote to scrap leap second by 2035 (phys.org)

- Google's new public NTP servers provide smeared time (reddit.com)

- Handling Dates, Times and Timezones in one API, a Design Rationale for the std::chrono Library (reddit.com)

- Handling Time When Programming (blog.vnaik.com)

- How Are Time Zones Decided? (timeanddate.com)

- How do you guys handle timezones? (reddit.com)

- How do you handle different timezones among users? (reddit.com)

- How do you possibly deal with timezones accuratly (reddit.com)

- How is the calendar of your world arranged and what is its reference epoch? (reddit.com)

- How to handle dates in an API when multiple timezones are involved (reddit.com)

- How to save datetimes for future events - (when UTC is not the right answer) (creativedeletion.com)

- How to Think About Time - Kevin Bourrillion (errorprone.info)

- International Atomic Time (wikipedia.org)

- Is the Greenwich Meridian in the Wrong Place? (timeanddate.com)

- John Dalziel: A brief history of Leap Seconds

- JS Dates Are About to Be Fixed (docs.timetime.in)

- Just store UTC? Not so fast! Handling Time zones is complicated. (codeopinion.com)

- Leap second (wikipedia.org)

- Leap Seconds, Smear Seconds, and the Slowing of the Earth (unsungscience.com)

- Moment Timezone Guides (momentjs.com)

- More falsehoods programmers believe about time; “wisdom of the crowd” edition (infiniteundo.com)

- North Korea’s new time zone is perfectly bizarre (washingtonpost.com)

- On the Timing of Time Zone Changes (codeofmatt.com)

- Practical Research Yields Fundamental Insight, Too (cacm.acm.org)

- Problematic Second: How the leap second, occurring only 27 times in history, has caused significant issues for technology and science. (reddit.com)

- Q: What's the best way I can detect missing/ambiguous times? (github.com)

- Scope of the tz database (data.iana.org)

- So You Want Continuous Time Zones (qntm.org)

- So You Want To Abolish Time Zones (qntm.org)

- Stopped clocks (davecturner.github.io)

- Storing times for human events (simonwillison.net)

- STORING UTC IS NOT A SILVER BULLET (codeblog.jonskeet.uk)

- Swatch Internet Time (wikipedia.org)

- Temporal (developer.mozilla.org)

- The Difference Between GMT and UTC (timeanddate.com)

- The End of Time Zones (the.endoftimezones.com)

- The Inside Story of the Extra Second That Crashed the Web (wired.com)

- The man who makes the day 86400.002s long - David Madore (madore.org)

- The One-Second War - Poul-Henning Kamp (cacm.acm.org)

- The Problem with Time & Timezones - Computerphile (youtube.com)

- The Unix leap second mess (madore.org)

- Time Programming Fundamentals - Greg Miller (youtube.com)

- Time zone (wikipedia.org)

- Time Zone Chaos Inevitable in Egypt (codeofmatt.com)

- Time Zones and Resolving Ambiguity (tc39.es)

- Time zones are hard (reddit.com)

- Timezone curiosities (davecturner.github.io)

- Timezone updates need to be fixed (creativedeletion.com)

- Usain Bolt: Positive Beijing Olympics retests are ‘really bad news’ for sport (boston.com)

- UTC is enough for everyone... right? (zachholman.com)

- What everyone except programmers knows about dates (creativedeletion.com)

- What happens after 2020 (github.com)

- What Is a Time Zone? (timeanddate.com)

- Why is subtracting these two epoch-milli Times (in year 1927) giving a strange result? (stackoverflow.com)

- Working with timezones - David Turner (davecturner.github.io)

- Wtf happened in October 1582 (instagram.com)

- Year zero (wikipedia.org)

- You advocate a ____ approach to calendar reform (qntm.org)

- Your Calendrical Fallacy Is... (yourcalendricalfallacyis.com)

- Zero-indexing the Gregorian calendar (qntm.org)

- “Leap year glitch” broke self-pay pumps across New Zealand for over 10 hours (arstechnica.com)

- "a modest proposal: fix timezones by establishing one continuous timezone with a simple model for deriving a wall time delta as a function of the longitude of both locations." (x.com)

- "pilots schedule everything in "zulu" (UMT) time. that helps make things easier for them" (x.com)

- "the majority of Spain is west of London and yet is an hour ahead" (x.com)

- "this is why I will always send you a calendar invite. language is too squishy around time" (x.com)

- Further reading (for myself when revisiting this topic):