The collective waste caused by poor documentation

Date published: Apr 12, 2025

During my programming career, I've often sunk hours or days into a small problem with a library (due to a bug, unexpected behavior, or a situation where I wanted to do something more specific or advanced and couldn't find a clear answer online). I'm usually not the only person to run into these problems either - a quick Google search often reveals multiple GitHub issues or Stack Overflow posts asking similar questions or reporting similar problems.

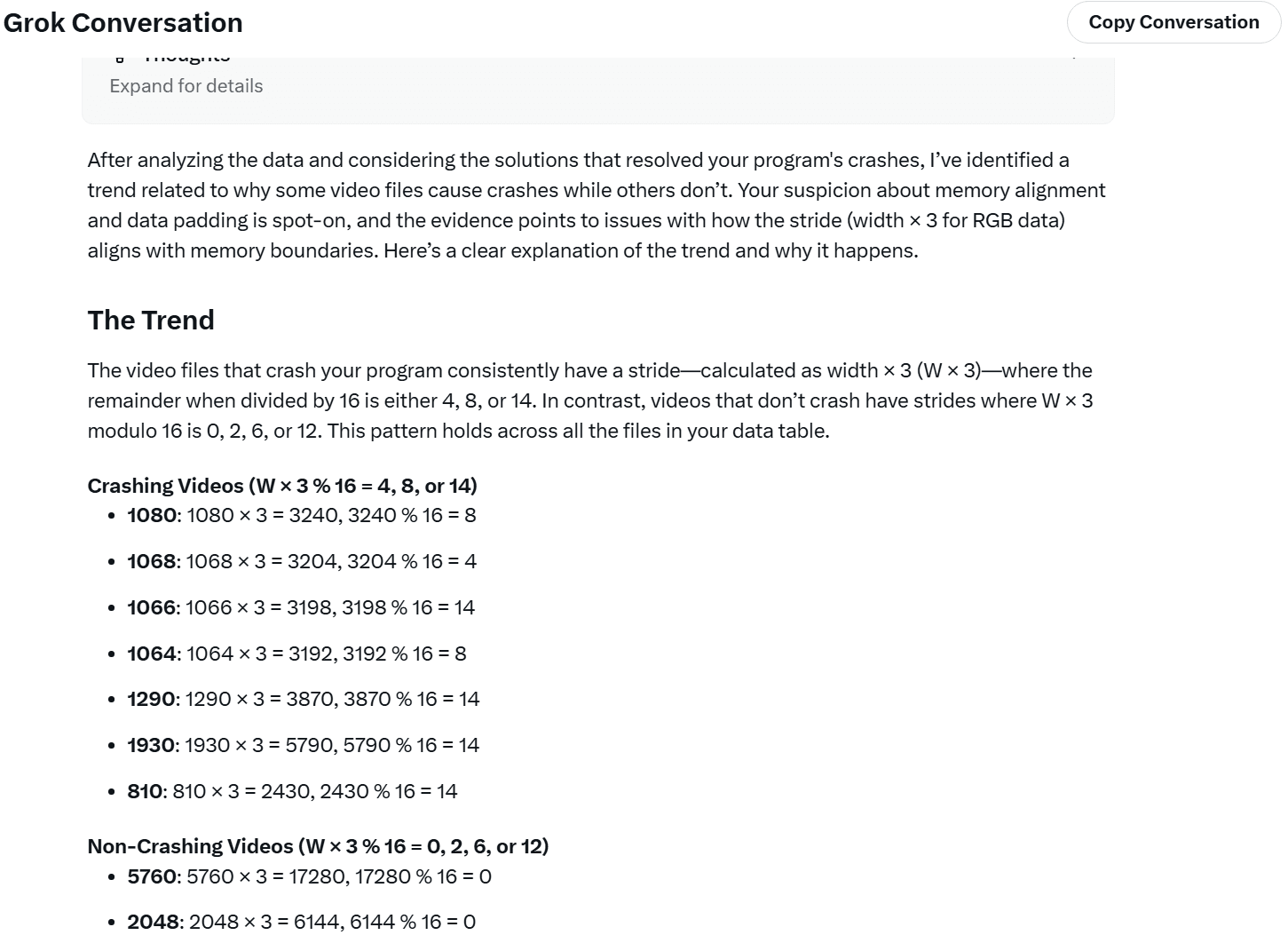

The most recent case, which reminded me of this general topic and prompted me to collect my thoughts and writing in one place, was with FFmpeg. I'm making an application involving video playback, and FFmpeg seems like the best option to quickly and flexibly implement what I'm looking for. However, I immediately ran into issues where a handful of my video files crashed the program or showed visual artifacts during playback. I spent a few days debugging this, and the cause turned out to be some seemingly undocumented linesize (stride) requirements for specific FFmpeg API functions like sws_scale. A brief Google search showed that other people had run into the same issue.

For each one of these problems, a small amount of clarity in the documentation or guidance from someone knowledgeable could have collectively saved thousands of development hours, which makes the time and energy spent feel all the more wasteful.

Or if only I could speak to someone knowledgeable, they'd probably be able to quickly tell me about this undocumented requirement. "But didn't this essentially happen? You found multiple posts online pointing out how aligned memory fixes the sws_scale issues, right?" Yeah, but 1) it still took some time to identify the problem and know what to Google for, and 2) I couldn't blindly trust people's claims about what fixed the problem. I didn't understand why the fix worked, so I was concerned that subtle bugs would remain in the code. Throwing my hands in the air, applying the suggested fix for potential buffer overflows (adding some padding bytes), and calling it a day felt like it could easily come back to bite me by introducing subtle, hard-to-trace issues. So I had to dig into the source code to convince myself that the fix made sense. Moreover, I initially only encountered buffer overflow crashes. If I had settled for adding padding bytes to the end of my output buffer, I would have missed the edge cases with visual artifacts during video playback, and I may not have discovered that the actual issue was around aligned linesizes until months later. (You can see more details about that in the GitHub issue here.)
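To make the difference between "padding bytes at the end" and "aligned linesizes" concrete, here is a minimal sketch of rounding a row's byte width up to an aligned linesize. The 32-byte alignment, the 3-bytes-per-pixel packed RGB layout, and the helper name `aligned_linesize` are assumptions for illustration, not values or functions taken from FFmpeg; the appropriate alignment depends on the CPU and on how the library was built.

```python
def aligned_linesize(width_px: int, bytes_per_pixel: int, alignment: int = 32) -> int:
    """Round one row's byte width up to the next multiple of `alignment`.

    `alignment` is an assumed value here and must be a power of two.
    """
    if alignment <= 0 or alignment & (alignment - 1):
        raise ValueError("alignment must be a positive power of two")
    row_bytes = width_px * bytes_per_pixel
    # Classic round-up-to-power-of-two trick.
    return (row_bytes + alignment - 1) & ~(alignment - 1)


# Hypothetical frame: 1918 pixels wide, packed RGB (3 bytes per pixel).
width, height = 1918, 1080
tight = width * 3                    # bytes of real pixel data per row
padded = aligned_linesize(width, 3)  # bytes per row including padding

print("tight row bytes:  ", tight)
print("aligned linesize: ", padded)
print("padding per row:  ", padded - tight)
print("buffer size:      ", padded * height)
```

In this sketch every row carries its own padding, so the buffer is sized as linesize times height. Appending a few spare bytes to the end of a tightly packed buffer leaves each row's stride unchanged, which is consistent with padding alone hiding the crashes but not the visual artifacts.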

I guess one benefit of recent LLM advancements is that they can partly make up for (but only by so much) not having access to knowledgeable people to answer questions; it was actually Grok (xAI's LLM) that helped me identify the underlying pattern causing the FFmpeg bug mentioned above (I noticed patterns, but they didn't seem to hold for all inputs, and then Grok was able to articulate a general pattern for me).

For some reason, API documentation is often not great. FFmpeg uses Doxygen, which I believe is largely auto-generated from the source code? It reminded me of LLVM's Doxygen documentation (funny video about that here from Jonathan Blow).

Fwiw though, I'm not sure what "good" documentation would look like. Sometimes I feel like the issue is that, even if the documentation is out there, it's either too hard to find, or it doesn't sufficiently address your specific questions/challenges (and then at that point who do you ask?)

An API reference, a getting started guide, and then a few real-world applications (where the code is relatively easy to reason about and modify) would probably be best. This is why I'm using raylib-media to start with on my current project - I've learned a lot about how the FFmpeg API works through the real, working examples for this library, and now as my needs evolve, I can use that understanding to adapt the FFmpeg API to my needs more directly (instead of through a wrapper library). That understanding would have taken longer to develop if I only had the FFmpeg documentation and had to manually experiment with how to display a video frame on the screen.

I remember I used to complain about this to myself in journals when I started programming. It relates to the concern of not knowing best practices and feeling like there isn't a place to seek/ask for clarity on those. I felt like there weren't enough clear examples of real-world projects that were 1) easily runnable (so that I can download it locally and interact with the software and make changes, which'll help understand it and get started on my own projects), and 2) relatively simple to understand. I guess though part of the issue was a lack of experience, because I don't feel this AS much anymore... (Edit 5/24/2025 08:24 PDT - Jon makes a similar point here, saying "I don't think I am very interested in most games' source code. When you are a good enough programmer, you look at something and you pretty much know how to do it. At that point it just becomes a matter of time investment and quality of execution. This wasn't true in the old days -- for example, when the original Doom came out, and then Quake after, lots of people were like, "what the hell, how is this even possible". But these days we know enough about how to make games that there is no obvious equivalent to Doom or Quake. We just know how to do things now. But if someone manages to do the equivalent of Doom in the year 2020, technology-wise, that would really be something to see!")

Anyway, maybe another time if I think it's worth getting into, I can write about open source "culture". Some notes:

- Jonathan Blow pointing out the culture of releasing something that's half-baked and hard to use. Posting your thing on Hacker News and/or Product Hunt. Grabbing people's attention for your thing that you've given a cute name for and oversold with claims like "blazingly fast".

- "We should have some kind of code of ethics for computer scientists... what that code of ethics should say is something about respecting the time of the people who use your software and respecting their quality of life, which is absolutely not done in software almost ever right now." YouTube video (4min onward), original video from Jonathan Blow Interview @ WPI 25 April 2016

- "Oh wdyt about the Windows Terminal? Wdyt about Bun? What do you think about the Carbon programming language?" (gossip)

- People making half-baked things and then releasing them for people to use and run into problems. The idea that if you spend 2 hours fixing a bug in software that has 100 users, the net benefit to all users of the software is maybe a couple of weeks' worth of time. Multiply that by several orders of magnitude for more widely used software.

- Preventing the Collapse of Civilization / Jonathan Blow (Thekla, Inc)

- Casey Muratori talking about library/API design. 25min onwards here

- A common point of contention around these topics is something like "open source is free, take it or else leave it and don't complain. Open source is thankless; the devs barely get paid. And yet you're confused and complaining about why these devs don't go 'above and beyond' and ensure clear documentation and APIs?". Maybe I should write about that sometime...
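The back-of-the-envelope reasoning in the note above about a 2-hour fix and 100 users can be written out as a rough sketch. The one hour lost per affected user is an assumed figure that the note does not state, and `net_hours_saved` is a hypothetical helper; different assumptions move the result a lot.

```python
def net_hours_saved(users: int, hours_lost_per_user: float, fix_hours: float) -> float:
    """Hours users collectively stop losing, minus the time spent on the fix."""
    return users * hours_lost_per_user - fix_hours


# Assumption: each affected user would otherwise lose about 1 hour to the bug.
small = net_hours_saved(users=100, hours_lost_per_user=1, fix_hours=2)
large = net_hours_saved(users=100_000, hours_lost_per_user=1, fix_hours=2)

print("100 users:     ", small, "hours, or", small / 40, "forty-hour work weeks")
print("100,000 users: ", large, "hours, or", large / 40, "forty-hour work weeks")
```

Under that assumption the 100-user case lands at roughly two and a half work weeks, and the cost of the fix barely registers once the user count grows by a few orders of magnitude.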

(I drafted out a few other examples of related patterns I'm gesturing at, but these are weaker and more loosely related. I'm keeping them here to incubate them)

(Other examples)

Full-stack web development

- This happens all the time in web development: time-consuming, trivial, silly issues with Webpack, TypeScript, React, Next.js, etc.

- I remember spending days trying to deploy Migaku Experiments Platform to AWS Lightsail, and I could not for the life of me get it to work... It was my first time ever creating a backend server (instead of using Firebase for a database + authentication) and deploying it to a bare-bones Ubuntu instance, so I likely wouldn't have as much trouble now, but my point is just that it shouldn't have been that complicated! And yet somehow it was. Neither the documentation nor other external sources online were helpful enough. I eventually gave up and switched to EC2 (which has similar documentation issues, but it's so popular that I was able to follow a tutorial to get things working).

- I remember wasting ~5 hours a few months ago trying to debug @vercel/nft crashing the Next.js build of helper.ai (a project I was contributing to while working at Gumroad). I never figured out the precise cause, but moving a function definition from one file (that I was importing from) into another file (and then importing from the new file) fixed the crash... I don't remember the exact error message off the top of my head, but I remember finding other GitHub issues complaining about the same exception, with unhelpful suggestions for fixing it...

- A similar bug happened some time shortly after in a route handler, and the solution was also to rearrange some code to appease Next.js.

- Looks like people still have issues with that package: GitHub link

- I spent at least a week (50+ hrs) inhaling Next.js documentation, obscure GitHub discussions, and insightful blog posts to better understand React server components (RSCs), server actions, caching, and how to best use all of these features. At the end of all of that, I felt like I had a really strong understanding of the topic, and it made building things in Next.js a lot easier (I wrote more about this experience here).

- And my coworkers agreed! After leaving the company, one of them told me something like "Your Next.js leadership will be missed!", which I was flattered by, and also amused by since I had barely 12 months of Next.js experience by that time! But this is the time investment you have to put in to understand any of these technologies and wade past surface-level discourse online. It shouldn't have to be this way... Developing a surface-level, functional understanding of Next.js doesn't take long, but developing the understanding around RSCs and server actions that's needed to evaluate when/how to use them and whether people's claims online about them are valid takes much longer.

- "But doesn't mastery inherently take time for some things? Why expect you can become an expert in a day?" I'm not necessarily disagreeing with that... Maybe I'll write about the nuance some other time.

Firebase

- (in hindsight, this isn't a good or clear example. I don't precisely remember what I was struggling with in this situation, but given my current recollection, it sounds like I was just struggling as a beginner and that I simply needed practice thinking about database schemas?)

- Firebase was the first solution I ever used for adding authentication and a database to an application of mine. Hosting my own server + database seemed too complex at the time (this was in ~2018, less than 1yr into my programming career). I didn't know any best practices for key-value/document-based databases. I remember stressing a lot about this passage from the documentation:

- "Because the Firebase Realtime Database allows nesting data up to 32 levels deep, you might be tempted to think that this should be the default structure. However, when you fetch data at a location in your database, you also retrieve all of its child nodes."

- (the exact passage may have changed since then, but I vaguely remember it looking like this)

- I can't remember precisely what about this was confusing me. I maybe was just struggling to apply the ideas to my specific project (a meeting attendance tracker). I just remember stressing in the speech and debate room with a pencil-and-paper about how to structure my schema to avoid the "waste" and potential performance issues that the documentation was warning about, and I felt like I had no feedback to know if the schema I settled on was the "best" or if it had issues. I couldn't find or piece together a clear/certain answer from the documentation or other online sources (like Stack Overflow). I spent at least a few days I think banging my head about it before I settled on a schema (and I think that time/effort felt unnecessary or like a waste of time to me, because I thought "surely this is a solved problem... and yet I can't find something that tells me the right answer, and so now I need to potentially waste a lot of time trying to figure it out or trying to act given that lack of knowledge. Would be so much faster if someone just told me the right answer...").

- I later had a similar confusion with MongoDB I think (because Lucas, the CEO of Migaku, was discussing with me whether we should use MongoDB for some project). I vaguely remember reading this idea that if you wanted to query for all "documents" in a collection containing a key-value pair (e.g. all user documents that look like `{ _id: 123, is_verified: true, ... }`), this query would "fetch" the entire document to check that `is_verified` property (and so therefore, you wanted to be smart about structuring your data to avoid querying excess data? But then I guess there was also the denormalization idea where you want to group data that you plan to query together?). Again, these ideas don't confuse me anymore, but as a beginner it felt so hard to get clarity on what felt like relatively simple questions about how to best structure the schema and what types of applications/data document-based databases are well-suited for.
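The nesting concern from the Firebase passage quoted earlier can be illustrated with plain Python dictionaries. This is only a simulation: `fetch` and `count_leaves` are hypothetical helpers that mimic "reading a location returns all of its child nodes", and the meeting attendance schema is invented for the example rather than recalled from the real project.

```python
# Nested layout: attendees live underneath each meeting.
nested = {
    "meetings": {
        "m1": {"title": "Kickoff", "attendees": {"alice": True, "bob": True}},
        "m2": {"title": "Practice", "attendees": {"alice": True, "carol": True, "dave": True}},
    }
}

# Flatter layout: meeting metadata and attendance are stored side by side.
flat = {
    "meetings": {"m1": {"title": "Kickoff"}, "m2": {"title": "Practice"}},
    "attendance": {
        "m1": {"alice": True, "bob": True},
        "m2": {"alice": True, "carol": True, "dave": True},
    },
}


def fetch(db: dict, path: str):
    """Return the node at `path`, including everything beneath it."""
    node = db
    for key in path.split("/"):
        node = node[key]
    return node


def count_leaves(node) -> int:
    """Count leaf values as a rough proxy for how much data a read returns."""
    if not isinstance(node, dict):
        return 1
    return sum(count_leaves(child) for child in node.values())


# Listing meeting titles drags every attendee along in the nested layout.
print("nested read of meetings:", count_leaves(fetch(nested, "meetings")), "values")
print("flat read of meetings:  ", count_leaves(fetch(flat, "meetings")), "values")
# Attendance for a single meeting is still one targeted read in the flat layout.
print("flat read of m1 attendance:", count_leaves(fetch(flat, "attendance/m1")), "values")
```

With two meetings the difference is trivial, which is part of why the warning is hard to act on as a beginner: whether the "waste" matters depends on how large the child data grows and which reads the application actually performs.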

SQL

- I vaguely remember struggling in the early days of learning SQL. I think Lucas (the CEO of Migaku) suggested that we use it for the Migaku Experiment Platform, so I needed to learn it. I didn't have too much trouble learning the basics, but when I wanted to answer certain types of questions using our data + schema, I didn't know what the "best" query would be to do that. I could come up with queries and/or data transformations in code to get the answers I wanted, but I wasn't confident it was the "best" solution (in terms of performance mainly i.e. "Is there a faster, more elegant way to express this as a single SQL query? Or do I have no choice but to do some processing in code after retrieving the query result? Who knows!"), and I couldn't find clear answers online explaining what the best solution would be. And fair enough I guess, since at a certain point, your situation is too specific to get tailored advice from Google searches maybe, but...

- Thoughts like "is this the correct way (and/or a "good" way) to do things? How would I know? I've never worked on a real project at a real company...".
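The "single SQL query versus processing in code" question above can be sketched with Python's built-in `sqlite3` module. The `events` table, its columns, and the sample rows are hypothetical and not taken from the actual project; the sketch only shows that both routes reach the same answer, not which one is faster.

```python
import sqlite3
from collections import Counter

# In-memory database with a made-up experiment results table.
conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE events (user_id INTEGER, variant TEXT, converted INTEGER);
    INSERT INTO events VALUES
        (1, 'A', 1), (2, 'A', 0), (3, 'B', 1), (4, 'B', 1), (5, 'B', 0);
    """
)

# Option 1: let the database aggregate in a single query.
in_sql = dict(
    conn.execute(
        "SELECT variant, SUM(converted) FROM events GROUP BY variant"
    ).fetchall()
)

# Option 2: fetch the raw rows and aggregate in application code.
in_code = Counter()
for variant, converted in conn.execute("SELECT variant, converted FROM events"):
    in_code[variant] += converted

print("aggregated in SQL: ", in_sql)
print("aggregated in code:", dict(in_code))
conn.close()
```

Which option is "best" depends on data volume, indexes, and where the database runs, so in practice the answer tends to come from measuring on the real schema.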

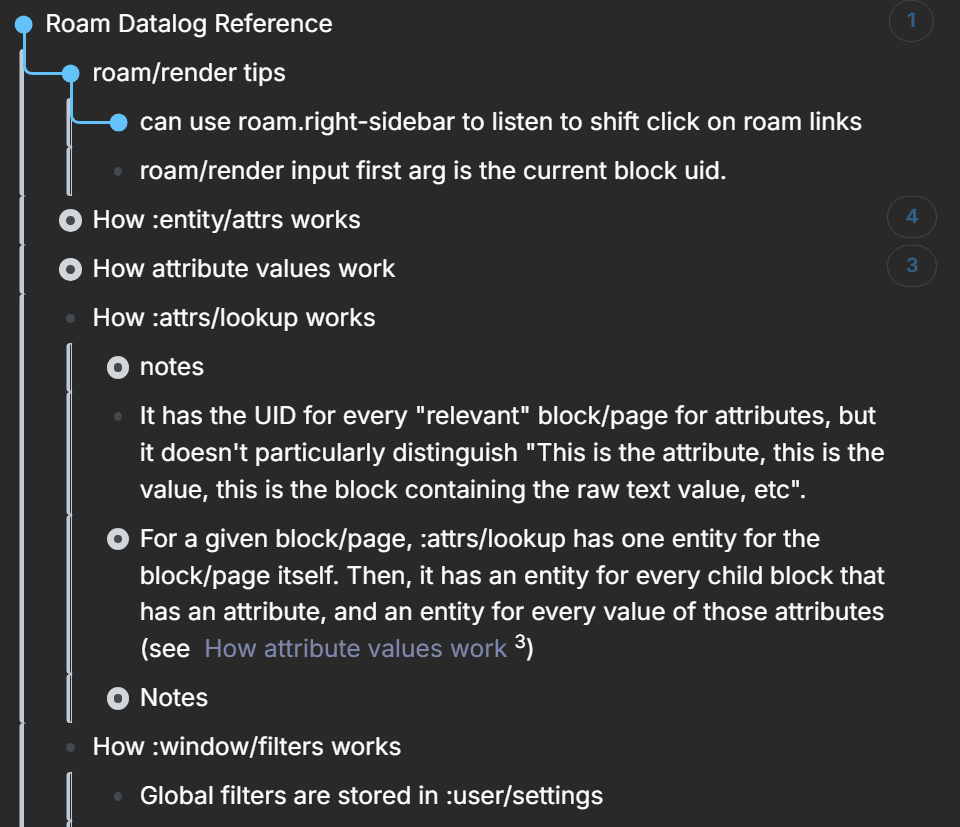

Datascript

- Roam Research uses Datascript for storing graph state, so I had to learn it to create more powerful queries and extensions. I ran into problems similar to the ones I ran into with SQL. I knew in theory how to do everything, but I didn't know with some certainty what the best practices were for certain types of queries or problems.

- like what WOULD be the best way to filter blocks by attributes given the structure of the data (that I can't modify directly, since it's Roam's database schema)

- I remember having to figure out a lot of things experimentally while working on a Roam Research query language.

- I vaguely remember figuring out experimentally that executing multiple queries and diffing/transforming those results in code was way faster than trying to construct a single, large query to produce (mostly) the final result.

- (I guess it makes some sense purely through the lens of latency; part of the reason people may want to avoid multiple database queries is because these queries usually happen over the network, but Datascript is an in-memory database)

- If I recall, I did a lot of experimentation to come up with this contraption to performantly query Roam Research attributes.

      (def attr-values-rule
        '[(attr-values ?block ?attr-eid ?v ?is-single-value ?parse-one-line-attr ?extract-attr-values ?eid->block-refs)
          [?block :attrs/lookup ?attr-block]
          [?attr-block :block/refs ?attr-eid]
          [?attr-block :block/string ?attr-string]
          [(?is-single-value ?attr-string) ?single-value]
          (or-join
            [?attr-block ?attr-eid ?v ?attr-string ?single-value ?parse-one-line-attr ?extract-attr-values ?eid->block-refs]
            ; One-liner attribute
            (and [(true? ?single-value)]
                 [(?parse-one-line-attr ?attr-string) [_ ?v]]
                 [(?eid->block-refs ?attr-block) ?refs]
                 [(?extract-attr-values ?v ?attr-eid ?refs) ?v])
            ; Multi-value attribute
            (and (not [(true? ?single-value)])
                 [?attr-block :block/children ?children]
                 [?children :block/string ?v]
                 ; Ignore empty blocks (even though Roam adds them to :attrs/lookup)
                 (not [(re-matches #"^\s*$" ?v)])
                 [(?eid->block-refs ?children) ?refs]
                 [(?extract-attr-values ?v ?attr-eid ?refs) ?v]))
          [(ground ?v) [?v ...]]])

- I had to do a lot of digging to figure out how to recreate the results from Roam's native `{{query}}` widget.

- But again, a lot of this work felt like a waste of time - if only I could just ASK Nikita (nikitonsky) or someone else how to do certain queries optimally (without needing to run benchmarks myself to re-derive best practices) or have these topics more clearly described in the documentation.

- Some questions/topics I couldn't get clarity on (based on notes I found scattered in my Roam graph):

- Does Datascript re-run assignment clauses of constants for every block in DB? (e.g. defining a regex inline with re-pattern vs passing the object in as a query input)

- How do you even debug Datascript queries and get visibility into them?

Edit 04/23/2025: FFmpeg mentions in the AVFrame type definition (frame.h) that linesizes should be multiples of the CPU's alignment preference, so I guess it would make sense to extrapolate and assume that sws_scale (which takes linesize values as a function parameter) also has this requirement, but... a single mention/reminder of this alignment requirement would go such a long way. I found a similar discussion on Stack Overflow with someone well-versed in FFmpeg, where he said:

I agree it may be useful to document it. You should submit a patch. :-). As for why we haven't documented it, you have to understand that multimedia literally has trillions of special-case rules or obnoxious behaviours like this, not because we - as ffmpeg developers - want to be irritating, but just because the technology is very non-uniform and requires special cases for all kind of situations to sort-of work. We can make it not work at all or document all special cases, but it would be more confusing. Know your tech stack. :-). - Ronald S. Bultje